Abstract

Volumetric video streaming has become increasingly popular in recent years because it supports six-degrees-of-freedom (6-DoF) exploration. However, there is a shortage of dynamic 6-DoF content suitable for comparing the performance of heterogeneous volumetric video representations. This paper introduces a software toolkit for creating both a dataset of dynamic 6-DoF content in point clouds and a dataset for training and testing neural-based representations such as neural radiance fields (NeRF). Starting from freely available online 3D assets, our toolkit uses the Blender Python API to generate training and testing datasets for dynamic neural volumetric models. The generated datasets are compatible with existing neural-based model training and rendering frameworks. The toolkit can also construct point cloud sequences from synthetic dynamic 3D meshes, which further facilitates comparing point cloud and neural-based methods for volumetric video representation. We release the toolkit along with a rich set of sequence datasets, generated in compliance with the permissions granted by the original 3D asset creators. With our toolkit and datasets, we aim to facilitate research in the multimedia systems community toward practical volumetric video streaming.
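To make "compatible with existing neural-based training frameworks" more concrete, here is a rough sketch (not the toolkit's code) of camera metadata in the `transforms_*.json` layout used by the original NeRF synthetic scenes. It adds a per-frame `time` field in the style of D-NeRF for dynamic content. The orbit radius, elevation, file naming, and the function names `look_at_c2w` and `build_transforms` are hypothetical. Inside Blender, the poses would more naturally be read from each camera object's `matrix_world` than computed by hand.

```python
import json
import math


def _normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def look_at_c2w(eye, target=(0.0, 0.0, 0.0), world_up=(0.0, 0.0, 1.0)):
    """Camera-to-world 4x4 matrix for a Blender/OpenGL-style camera
    (looks along its local -Z axis, local +Y is up; world is Z-up).
    Degenerate if the view direction is parallel to world_up."""
    forward = _normalize([t - e for t, e in zip(target, eye)])
    right = _normalize(_cross(forward, world_up))
    up = _cross(right, forward)
    back = [-f for f in forward]  # camera +Z points away from the target
    return [
        [right[0], up[0], back[0], eye[0]],
        [right[1], up[1], back[1], eye[1]],
        [right[2], up[2], back[2], eye[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]


def build_transforms(num_frames, num_views, radius=4.0, elevation_deg=30.0,
                     focal_mm=50.0, sensor_width_mm=36.0, split="train"):
    """Hypothetical helper: one entry per (animation frame, camera view),
    cameras on a ring around the origin, time normalized to [0, 1]."""
    # Horizontal field of view; defaults match Blender's default camera.
    camera_angle_x = 2.0 * math.atan(sensor_width_mm / (2.0 * focal_mm))
    elev = math.radians(elevation_deg)
    frames = []
    for f in range(num_frames):
        time = f / (num_frames - 1) if num_frames > 1 else 0.0
        for v in range(num_views):
            azim = 2.0 * math.pi * v / num_views
            eye = (radius * math.cos(elev) * math.cos(azim),
                   radius * math.cos(elev) * math.sin(azim),
                   radius * math.sin(elev))
            frames.append({
                # Image path without extension, as in the NeRF synthetic files.
                "file_path": f"./{split}/f{f:03d}_v{v:02d}",
                "time": time,
                "transform_matrix": look_at_c2w(eye),
            })
    return {"camera_angle_x": camera_angle_x, "frames": frames}
```

Plain Python keeps the sketch runnable outside Blender. Writing the result with `json.dump(build_transforms(60, 100), fp, indent=2)` would produce a file in this assumed layout. Whether a given framework expects exactly these keys should be checked against its own data loader.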

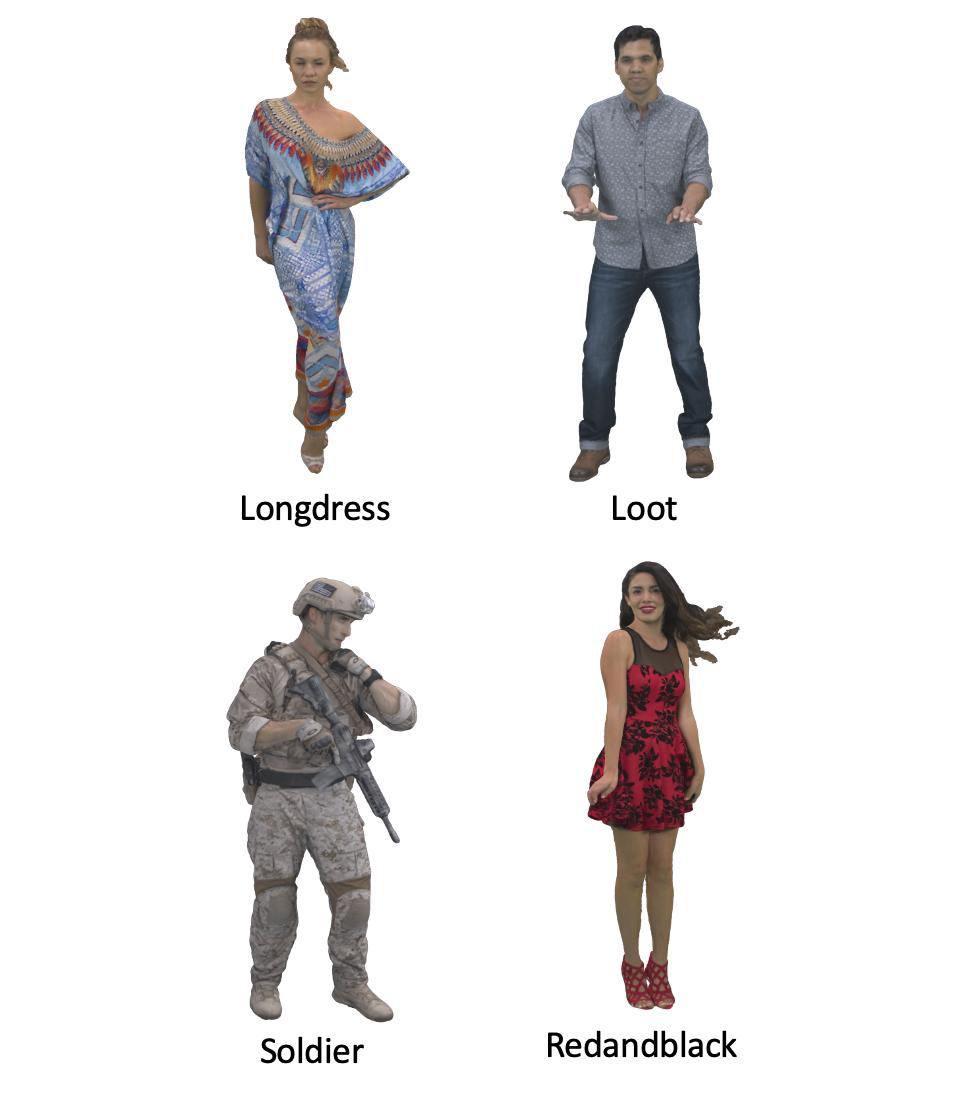

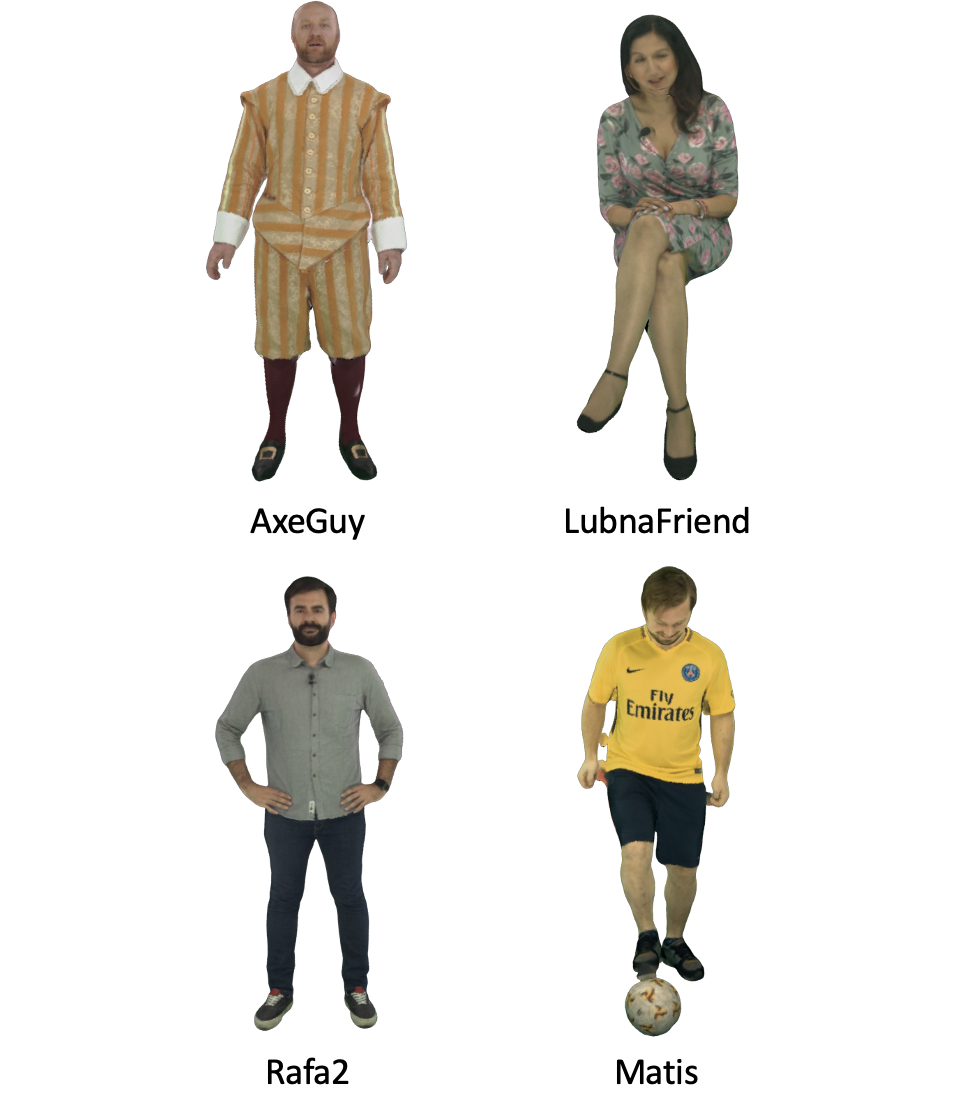

Front-facing images of 12 models.

The Synthetic dataset contains four animated models edited in Blender (Lego, Pig, Amy, and Worker), shown on the left. Longdress, Loot, Redandblack, and Soldier come from the 8iVFBV2 dataset and are shown in the middle. The remaining four models, AxeGuy, LubnaFriend, Rafa2, and Matis, come from the vsenseVVDB2 dataset and are shown on the right.

BibTeX

@inproceedings{zhu2024dynamic,

title={Dynamic 6-DoF Volumetric Video Generation: Software Toolkit and Dataset},

author={Zhu, Mufeng and Sun, Yuan-Chun and Li, Na and Zhou, Jin and Chen, Songqing and Hsu, Cheng-Hsin and Liu, Yao},

booktitle={2024 IEEE 26th International Workshop on Multimedia Signal Processing (MMSP)},

pages={1--6},

year={2024},

organization={IEEE}

}